Quantization noise

Image histograms can be used to spot significant quantization errors that arise from a reduction in bit depth.

Quoting Wikipedia: When converting from an analog signal to a digital signal, error is unavoidable. An analog signal is continuous, with ideally infinite accuracy, while the digital signal's accuracy is dependent on the quantization resolution, or number of bits of the analog to digital converter. The difference between the actual analog value and approximated digital value due to the "rounding" that occurs while converting is called quantization error.

Quantization noise is a noise error introduced by quantization in the analogue to digital conversion (ADC).
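The rounding described above can be illustrated with a minimal sketch. It assumes NumPy, an idealized converter that rounds to the nearest level, and uniformly random values standing in for an analog signal; the helper `quantize` is hypothetical and only serves the illustration.

```python
import numpy as np

def quantize(signal, bits, full_scale=1.0):
    """Round values in [0, full_scale] to the nearest of 2**bits levels.

    Returns the integer codes and the values they represent.
    """
    levels = 2 ** bits
    step = full_scale / (levels - 1)          # spacing between adjacent levels
    codes = np.clip(np.round(signal / step), 0, levels - 1).astype(np.int64)
    return codes, codes * step

# Stand-in for an analog signal: random values in [0, 1) (an assumption).
rng = np.random.default_rng(0)
analog = rng.uniform(0.0, 1.0, size=100_000)

results = {}
for bits in (8, 12):
    _, reconstructed = quantize(analog, bits)
    error = reconstructed - analog            # quantization error per sample
    step = 1.0 / (2 ** bits - 1)
    results[bits] = (np.abs(error).max(), error.std(), step)
    print(f"{bits:2d} bit: max |error| = {np.abs(error).max():.2e} "
          f"(step/2 = {step / 2:.2e}), "
          f"std = {error.std():.2e} (step/sqrt(12) = {step / np.sqrt(12):.2e})")
```

Under these assumptions the error never exceeds half a quantization step, and its standard deviation is close to the step divided by the square root of 12, the value expected for a uniformly distributed error. Going from 8 to 12 bits shrinks the step, and with it the error, roughly 16-fold.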

This quantization error is unavoidable during acquisition because of the limitations of the ADC, but it should not be increased during data processing when the image is converted to another data type. Any change of color depth (bits per pixel) usually introduces round-off errors that can significantly degrade the information in the image.

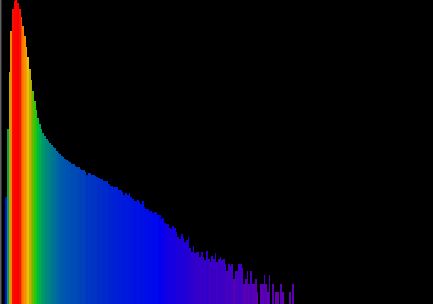

In ideal situations, quantization noise during acquisition is uniformly distributed, and you can see that the image histogram varies smoothly:

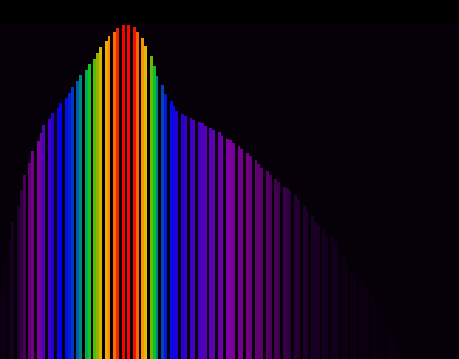

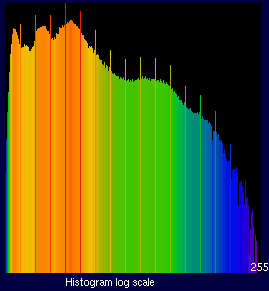

But after reducing a 12-bit image to 8 bit (byte), round-off errors usually leave unused intensity values that show up in the histogram as sharp gaps.

Alternatively, this problem can also show up as equally spaced peaks in the histogram, because the round-off errors make certain values occur more often. (This is like taking the blocks from the previous case and shifting them toward each other to close the gaps. If you shift them far enough that they overlap, the pixel count suddenly increases in the overlapping histogram bins.)
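Both histogram patterns can be reproduced with a small sketch. It assumes NumPy and a conversion that linearly rescales the occupied intensity range onto 0..255 and rounds to the nearest integer; the helper `rescale_to_8bit` is hypothetical, and real conversion tools may scale differently. The input is a synthetic ramp in which every source value occurs exactly once, so any structure in the output histogram comes from rounding alone.

```python
import numpy as np

def rescale_to_8bit(image, src_max):
    """Linearly map [0, src_max] onto [0, 255] and round to uint8."""
    scaled = np.round(image.astype(np.float64) * 255.0 / src_max)
    return scaled.astype(np.uint8)

# Case 1, gaps: only 128 distinct source values are stretched over 256 bins,
# so half of the 8-bit values can never be produced.
narrow = np.arange(128, dtype=np.uint16)
hist_gaps = np.bincount(rescale_to_8bit(narrow, src_max=127), minlength=256)

# Case 2, peaks: 384 distinct source values are squeezed into 256 bins by a
# non-integer factor, so some bins receive two source values and others one.
wide = np.arange(384, dtype=np.uint16)
hist_peaks = np.bincount(rescale_to_8bit(wide, src_max=383), minlength=256)

print("empty bins after stretching:", int((hist_gaps == 0).sum()))
print("bin counts after squeezing: ", np.unique(hist_peaks).tolist())
```

In this sketch the gaps appear when the source occupies fewer distinct values than the target offers, and the peaks appear when the scale factor is not an integer, so neighbouring bins collect unequal numbers of source values.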

This should be avoided. A 12-bit image dataset is better stored in a 16-bit file "container" (even though some bits are unused and the resulting file is larger) than in a narrower 8-bit file (where the required depth reduction will certainly introduce a lot of quantization noise).
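The trade-off can be checked with a short sketch. It assumes NumPy, a synthetic image that contains every 12-bit value exactly once, and a depth reduction that simply drops the four least significant bits; other reduction schemes would give different, but still nonzero, losses.

```python
import numpy as np

# Synthetic 12-bit data: every value 0..4095 once, arranged as a 64 x 64 image.
image_12bit = np.arange(4096, dtype=np.uint16).reshape(64, 64)

# 16-bit container: the values fit as they are, the top 4 bits stay unused.
as_16bit = image_12bit.astype(np.uint16)

# 8-bit container: the 4 least significant bits must be discarded.
as_8bit = (image_12bit >> 4).astype(np.uint8)
restored = as_8bit.astype(np.uint16) << 4     # best-effort return to 12-bit scale

lossless = np.array_equal(as_16bit, image_12bit)
levels_before = np.unique(image_12bit).size
levels_after = np.unique(as_8bit).size
max_loss = int((image_12bit - restored).max())

print("16-bit storage lossless:", lossless)
print("distinct levels:", levels_before, "->", levels_after)
print("largest error after 8-bit round trip:", max_loss, "grey levels")
```

The 16-bit array costs twice the storage of the 8-bit one but keeps every original level, whereas the 8-bit version merges each group of 16 neighbouring levels into one and cannot be restored.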